AI and ChatGPT in Video Production Are Here

Adobe, Blackmagic Design, and Others Are Transforming Video Production with Cutting-edge Tools and Reimagining Creative Potential

It's been six months since I last wrote something for Feed the Sensor, and I've lived in two different states (Oregon and Washington) in that time. I've been busy! But I want to take a moment to thank the seven people who've subscribed in the interim. You may number fewer than a baker's dozen, but y'all give me motivation to write and I appreciate you being here. Thank you!

I look forward to creating more authentic content for Feed the Sensor. My goal is for this site to become a haven for human-written reviews and articles on tech, set against a world of content and comments written by AI. When you come here, you'll only read genuine observations and experiences. If you, one of the seven, would like to support this future for Feed the Sensor, share this around!

Speaking of AI, ChatGPT is huge. If you live out in the wilderness and the word "wireless" makes you think of a tent without tie-downs to keep it up, I envy you. There is an arms race among the biggest tech companies for artificial intelligence dominance. Call them chatbots or prompt services, large language models like OpenAI's ChatGPT and Google's Bard are replacing many of the functions of search engines and, sadly, whole creative departments at companies. I've already heard stories of whole writing departments getting axed, their replacements being the AI minds of Jasper.ai or Byword.ai.

In the video production industry, we are getting quite a few new tools at the expense of copywriters, graphic designers, and editors. Where you'd be hiring a writer to come up with a script for a shoot, now you're looking at dropping a few prompts into a chatbot to get a slew of mostly well-written scripts that need a few revisions. Graphic designers have to compete with Midjourney and motion graphics designers have to deal with Runway.

Just take a look at Midjourney's showcase section. It's astounding.

Visual effects artists should be paying attention to Wonder Dynamics. The service can do body motion capture, lighting and compositing, and a bunch of other incredible things. Their demo video shows actors getting swapped out with 3D model assets, no motion capture gear needed.

All designers and VFX artists will soon have to compete with Adobe Firefly. In Firefly, you're given a popup chat bar. Type into it what you want done to a selected part of your composition. Do you want to make it look like it's snowing? Do you want to remove a children's slide from a shot? Ask Firefly and like magic it'll do as it is told. I am so curious to see the direction that this takes inside Premiere Pro.

Editors are probably already aware of the dozens of companies out there promising their service can generate video content from bare blog posts. You've got Synthesia.io, Steve.ai, and Pictory.ai, to name three, though at this point the quality of their content is mediocre at best (think late-2010s boilerplate "Happy New Year" animations with fewer than five likes on Facebook business pages, but for video content).

Though those video-generating services produce "meh" content right now, what will they produce in a few months? A year? The other day, I dropped a transcript of an interview from a corporate project into ChatGPT and asked it to generate a video in XML format based on the transcript. And you know what? It almost did it. The times were off, but there was the semblance of a rough cut. How long will it be before AI companies combine the XML creation of ChatGPT with object recognition for B-roll to create fully edited, delivery-ready content?
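To make the "rough cut as XML" idea a little more concrete, here is a minimal Python sketch of what such a file boils down to: a list of soundbites, each with a source in and out point, laid end to end on a timeline. Everything in it is an assumption for illustration. The `roughcut` and `clip` element names, the `rough_cut_xml` function, and the sample soundbites are all made up, and real editing software expects its own interchange schema, not this one. The point is only that the timing math is simple to check, which is exactly where my ChatGPT attempt fell down.

```python
import xml.etree.ElementTree as ET


def rough_cut_xml(segments, fps=24):
    """Lay soundbites end to end and return a toy rough-cut XML string.

    segments: list of (source_in_seconds, source_out_seconds, text).
    The element and attribute names are hypothetical, not a real NLE schema.
    """
    root = ET.Element("roughcut", {"fps": str(fps)})
    timeline_pos = 0  # running position on the timeline, in frames
    for src_in, src_out, text in segments:
        in_frame = round(src_in * fps)
        out_frame = round(src_out * fps)
        if out_frame <= in_frame:
            # Catch the kind of bad timing a chatbot might hand back.
            raise ValueError(f"out point must come after in point: {text!r}")
        clip = ET.SubElement(
            root,
            "clip",
            {"in": str(in_frame), "out": str(out_frame), "start": str(timeline_pos)},
        )
        clip.text = text
        timeline_pos += out_frame - in_frame
    return ET.tostring(root, encoding="unicode")


# Hypothetical soundbites pulled from an interview transcript.
segments = [
    (12.0, 15.5, "We started in a garage."),
    (40.0, 42.0, "Now we ship worldwide."),
]
xml_text = rough_cut_xml(segments)
print(xml_text)
```

A script like this won't pick good soundbites for you, but it will at least refuse a clip whose out point lands before its in point.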

I want to take the positive approach here: AI is incredible, and so are the benefits it can provide to filmmakers. Rather than offer just one video deliverable to a client, we can offer any number of deliverables based on criteria like target audience, length, or even "vibe." Within seconds, we could have a dozen variants of a video. Clients would love this. There is major value there.

But we're not there yet. At this moment in time, we're just starting to see the fruits of AI in our workflows. At NAB 2023, Adobe and Blackmagic Design both unveiled beta versions of their non-linear editing suites containing many new AI features.

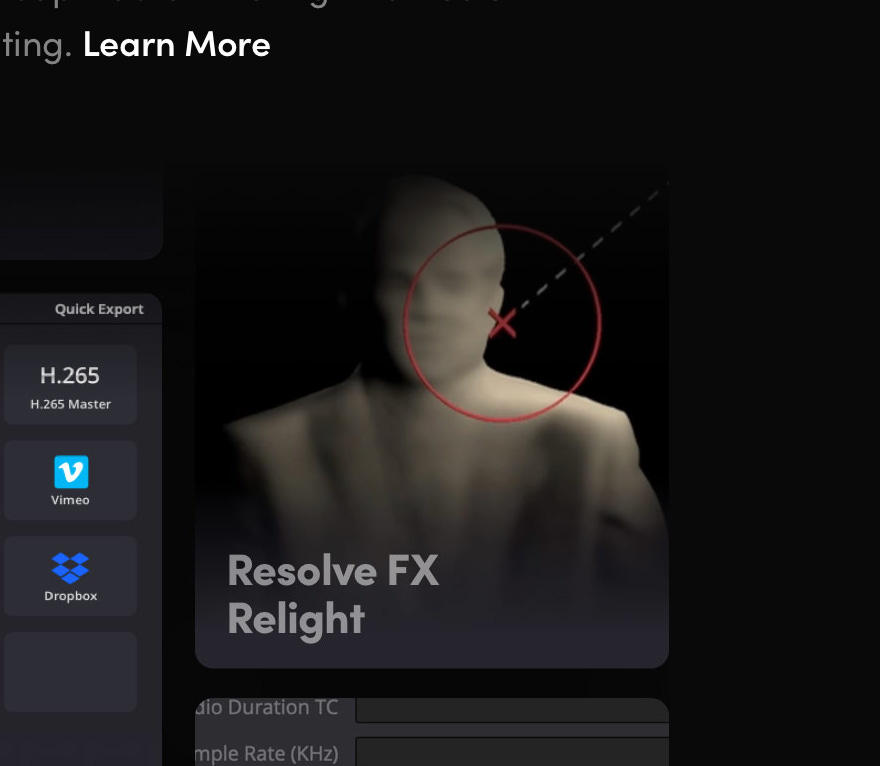

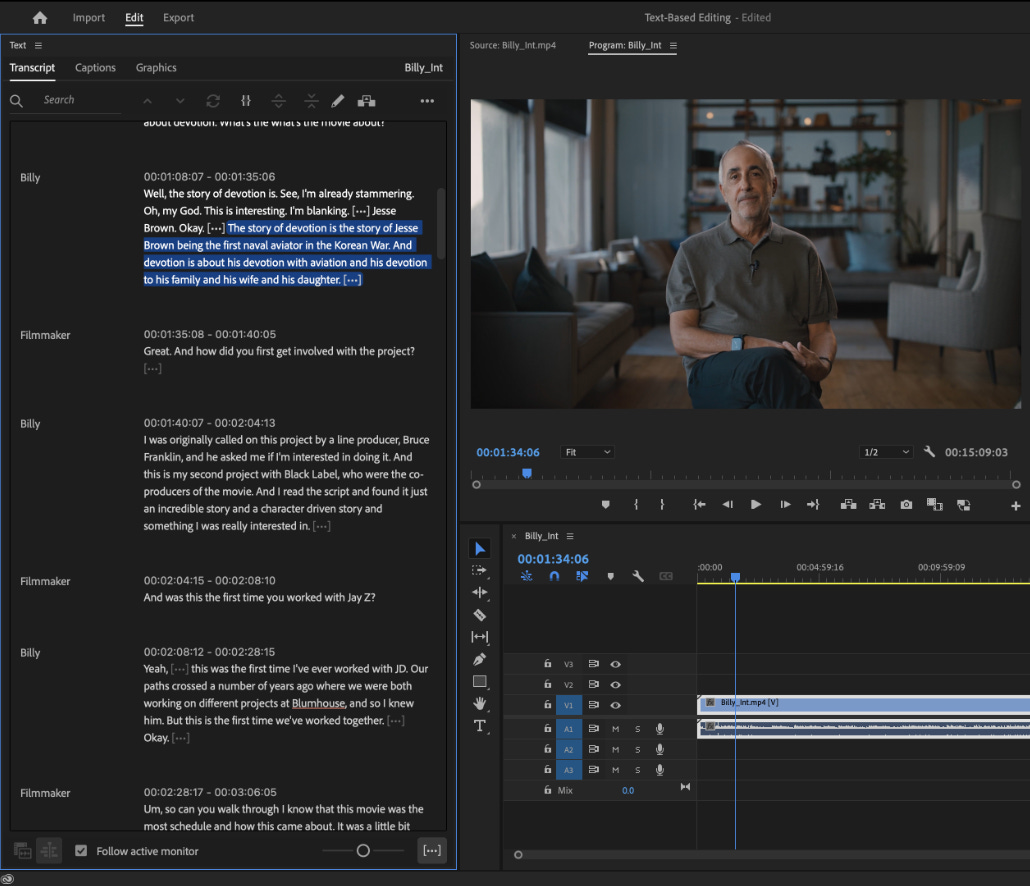

In DaVinci Resolve 18.5, the DaVinci Neural Engine brings auto-transcribing to interviews, text-based editing a la Descript to timelines, footage classification and tagging based on object recognition to clips in your folders, and scene relighting via Relight FX. With auto transcriptions, you can see the content of the interview as you edit. With text-based editing, you can select, remove, and rearrange this content just like a Word doc.
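If you're wondering how editing text can possibly cut video, the general idea is that every transcribed word carries a start and end time, so deleting words from the transcript tells the software which time ranges to keep. Here's a minimal Python sketch of that idea. It is my own illustration, not how Resolve, Premiere Pro, or Descript actually implement it, and the `Word` class, the `keep_ranges` function, and the sample timings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds into the clip
    end: float


def keep_ranges(words, deleted):
    """Return (start, end) time ranges to keep after deleting words.

    deleted: set of word indexes removed in the transcript.
    Kept words that sit next to each other are merged into one range.
    """
    ranges = []
    last_kept = None
    for i, word in enumerate(words):
        if i in deleted:
            continue
        if last_kept == i - 1 and ranges:
            # Neighbor of the previous kept word: extend the current range.
            ranges[-1] = (ranges[-1][0], word.end)
        else:
            # First kept word, or first word after a deletion: new range.
            ranges.append((word.start, word.end))
        last_kept = i
    return ranges


# Hypothetical word timings from an auto-transcription.
words = [
    Word("So", 0.0, 0.2),
    Word("um", 0.2, 0.5),
    Word("we", 0.5, 0.7),
    Word("shipped", 0.7, 1.2),
    Word("it", 1.2, 1.4),
]

# Delete the "um" (index 1) in the transcript.
print(keep_ranges(words, {1}))  # [(0.0, 0.2), (0.5, 1.4)]
```

Each range that comes back is one clip on the timeline, so removing a single "um" turns one clip into two.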

Relight FX is a stunner, allowing you to add light sources to a shot in 3D space with correct falloff characteristics based on scene analysis. Now we can more easily say "Fix it in post!" when the gaffer wants to make an adjustment to that hair light but can't reach it without grabbing the ladder from the back of their van.

In Adobe Premiere Pro, we're getting Text-Based Editing (again like Descript) and Auto Tone Mapping. What the tone mapping feature allows you to do is sync a color profile across log footage from various cameras in a timeline, no famous YouTuber LUT needed. I'm not kidding. You can drop in log clips from a Panasonic EVA1, a Sony a7S III, and a Canon C70, check the box for "auto tone mapping," and all of the footage will instantly match the color space of your timeline, be it Rec. 709 or HDR. (Source: Newsshooter)

In the audio realm, companies like Auphonic are turning the arduous process of mixing and mastering into an experience akin to your local frozen yogurt shop. Show up; upload your raw interview audio, podcast content, or even fully-edited commercials including music to Auphonic; select a few options for your output, a sprinkle of noise reduction here, a dollop of voice EQ there; pick your metadata and output file types; and hit Upload.

Within a few minutes, Auphonic outputs incredible audio quality. I'm not talking "Oh the audio sounded like a potato before because I recorded it on my phone and now it sounds like it came from a Shure SM7B," I'm talking about the sound of a professionally mixed and mastered audio file. The clarity and presence of the voices. The reduction and gating. The ducking of music under dialogue despite uploading a flattened track. It's incredible. Auphonic is one of the first AI-driven tools to talk me out of hiring a contractor for audio correction; getting the quality I get out of Auphonic from a contractor would cost more than the budgets on my productions allow.

These new AI tools aside, the "original" ChatGPT is providing a helpful hand in all steps in the production process, not just in scriptwriting. Personally, I've been using it to summarize meetings with clients, quickly generate a script for an idea for a project to see what it might feel like to produce, revise my list of interview questions for a series of interviews based on context (such as client objectives) or suggest additional questions, and assist in writing content for my company's site, Sandpiper Video.

ChatGPT right now is not connected to the internet the way Bing's implementation of GPT-4 is. I use Bing now for one particular task: before I have a meeting with a potential client, I'll ask it to brief me on the client. I put this brief in my notes. Then I ask Bing to summarize my company and find alignment between my company's services and the client I'm meeting with. The resulting bulleted list of projects that we can do together more often than not contains a few things that I didn't think of when preparing for the meeting.

I would say the generative capability of ChatGPT has been most beneficial for me in day-to-day video production tasks. Be it interview questions, scripts, or client meeting info, I am doing way more than I could have back in, when was it, October of 2022? I caught wind of it back in December of 2022 after listening to Michael Barbaro and Kevin Roose worry over Sydney on The Daily.

Being alive to witness the rise of AI is something special.

How are you using AI at the moment in your work?

I'd love to see a tool for the interviewer that in real time transcribes the conversation, suggests questions for the interviewer in the context of a set of marketing objectives or prewritten script, and builds a shotlist based on interviewee answers.

I could also see another tool like a "virtual AD" for "AI-enhanced callsheets and schedules" that optimize as the day goes on to keep the director/producer/DoP from pushing people into OT.

If you enjoyed this post or found value in it, you can buy me a coffee or subscribe below. Thanks!